AI Component Library - MateChat Advanced Usage: Multiple Sessions (Part 4)

- AI

- 2025-04-14 11:15:48


MateChat Advanced Usage: Multiple Sessions

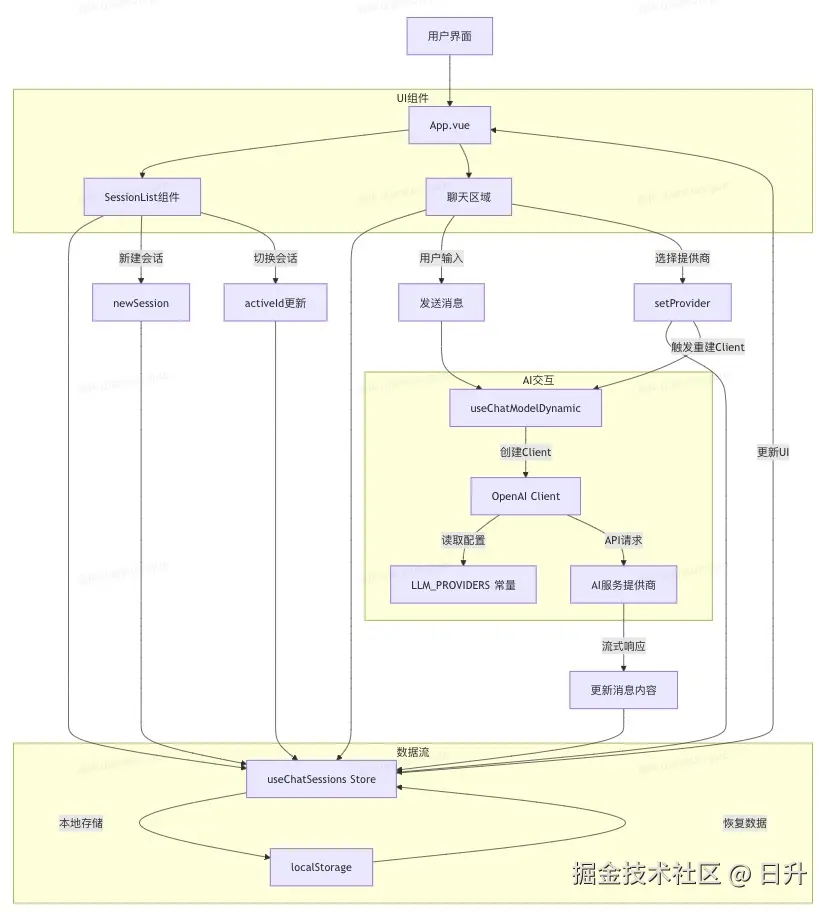

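I. Multi-Session Architecture

Goal: as in ChatGPT, a session list on the left and the MateChat UI for the selected session on the right, with all history still recoverable after a page refresh.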

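1. Project Overview

1.1. Requirements

- Build a chat application on Vue 3 that can talk to multiple AI models.
- Provide session management: creating new sessions, switching between sessions, and saving session history.
- Support connecting to and switching between several AI providers (DeepSeek, OpenAI, Qwen).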

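1.2. Basic Project Logic

- State management uses Pinia; useChatSessions stores and manages the session data.
- Sessions are persisted: chat history is saved in localStorage.
- useChatModelDynamic switches between AI providers at runtime.
- The OpenAI SDK talks to each provider's API and supports streaming responses.
- The chat UI is built with the MateChat component library, including message bubbles and the input box.
- The layout is responsive: a fixed-width session list on the left and a chat area on the right that fills the remaining width.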
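The persistence point above can be made concrete with a small sketch. It assumes a storage object with the same getItem/setItem shape as localStorage; the `KeyValueStore` interface and the `memoryStore`, `saveSessions`, and `loadSessions` helpers are illustrative names, not part of the project. An in-memory stand-in is used so the snippet also runs outside the browser.

```typescript
// Minimal shape shared by localStorage and the in-memory stand-in below (hypothetical interface).
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

interface StoredSession {
  id: string;
  title: string;
  messages: { from: "user" | "model"; content: string }[];
}

const STORAGE_KEY = "matechat-sessions"; // same key the store in this article uses

// In-memory stand-in so the sketch runs in Node; in the browser, pass window.localStorage instead.
function memoryStore(): KeyValueStore {
  const data: Record<string, string> = {};
  return {
    getItem: (key) => (key in data ? data[key] : null),
    setItem: (key, value) => {
      data[key] = value;
    },
  };
}

// Serializes the whole session list under one key.
function saveSessions(store: KeyValueStore, sessions: StoredSession[]): void {
  store.setItem(STORAGE_KEY, JSON.stringify(sessions));
}

// Returns [] when nothing is stored or the stored JSON is corrupt.
function loadSessions(store: KeyValueStore): StoredSession[] {
  const raw = store.getItem(STORAGE_KEY);
  if (!raw) return [];
  try {
    const parsed: unknown = JSON.parse(raw);
    return Array.isArray(parsed) ? (parsed as StoredSession[]) : [];
  } catch (err) {
    return [];
  }
}
```

Guarding the parse step is a design choice of this sketch: a corrupt entry then yields an empty session list instead of an exception during startup.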

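1.3. Tech Stack and Dependencies

- Frontend framework: Vue 3 + TypeScript
- State management: Pinia
- UI components: Naive UI and MateChat Core
- Utility libraries: dayjs (date handling), nanoid (ID generation)
- API client: OpenAI SDK
- Build tool: Vite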

2. Adding Dependencies

"dependencies": {
  "@devui-design/icons": "^1.4.0",
  "@matechat/core": "^1.5.2",
  "dayjs": "^1.11.13",
  "naive-ui": "^2.41.1",
  "nanoid": "^5.1.5",
  "openai": "^5.1.1",
  "pinia": "^3.0.3",
  "vue": "^3.5.13",
  "vue-devui": "^1.6.32"
},
"devDependencies": {
  "@vitejs/plugin-vue": "^5.2.3",
  "@vue/tsconfig": "^0.7.0",
  "typescript": "~5.8.3",
  "vite": "^6.3.5",
  "vue-tsc": "^2.2.8"
}

3. Directory Structure

src
├── App.vue
├── components
│   └── SessionList.vue
├── constants
│   └── llmProviders.ts
├── hooks
│   └── useChatModelDynamic.ts
├── main.ts
├── stores
│   └── useChatSessions.ts

4. Full Code

4.1. main.ts

import { createApp } from 'vue'
import './style.css'
import App from './App.vue'
import { createPinia } from 'pinia'
import naive from 'naive-ui'
import MateChat from '@matechat/core';
import '@devui-design/icons/icomoon/devui-icon.css'; // icon font

const pinia = createPinia()

createApp(App).use(MateChat).use(pinia).use(naive).mount('#app')

4.2. App.vue

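What remains of the template is the hint text "Click + on the left to create a new session" and an input box that assigns its value to input (input = v) and handles @submit with submit. The script section follows.

// The setup lines down to activeSession are assumed from how store, send, and activeSession are used below;
// the import paths follow the directory structure in section 3.
import { ref, computed, watch } from "vue";
import { useChatSessions } from "./stores/useChatSessions";
import { useChatModelDynamic } from "./hooks/useChatModelDynamic";

const store = useChatSessions();
const { send } = useChatModelDynamic();

/* Currently active session */
const activeSession = computed(() => store.active);

/* Input box content */
const input = ref("");

/* Provider chosen in the dropdown */
const provider = computed({
  get: () => activeSession.value?.provider ?? "deepseek",
  set: (v) => store.setProvider(v as any),
});

/* Automatically select the newest session when none is active */
watch(
  () => store.sessions.length,
  (v) => {
    if (v && !store.activeId) store.activeId = store.sessions[0].id;
  }
);

/* Send */
function submit(text: string) {
  if (!text.trim() || !activeSession.value) return;
  send(text).catch((err) => alert(err.message));
  input.value = "";
}

/* Avatars */
const userAvatar = {
  imgSrc: "https://matechat.gitcode.com/png/demo/userAvatar.svg",
};
const modelAvatar = { imgSrc: "https://matechat.gitcode.com/logo.svg" };

4.3. SessionList.vue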

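The surviving template text is a "Sessions" title, a "New session" action, and, for each session s, {{ s.title }} followed by {{ dayjs(s.updatedAt).fromNow() }}.

4.4. llmProviders.ts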
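The provider table in this section ships with placeholder keys ("sk-xxx"). One way to keep real keys out of source control is to merge them in from an environment-like record at startup. The sketch below is an assumption layered on top of the article's config: `withApiKeys`, the `ProviderConfig` type, and the `VITE_<NAME>_API_KEY` variable names are hypothetical. A key used from the browser with dangerouslyAllowBrowser is still visible to the user, so this helps with source control only, not with secrecy.

```typescript
interface ProviderConfig {
  label: string;
  baseURL: string;
  model: string;
  apiKey: string;
}

// Returns a copy of the table with apiKey replaced wherever env holds a
// non-empty VITE_<NAME>_API_KEY entry (hypothetical naming scheme).
function withApiKeys<K extends string>(
  providers: Record<K, ProviderConfig>,
  env: Record<string, string | undefined>
): Record<K, ProviderConfig> {
  const result = {} as Record<K, ProviderConfig>;
  for (const name of Object.keys(providers) as K[]) {
    const envKey = env[`VITE_${name.toUpperCase()}_API_KEY`];
    result[name] = { ...providers[name], apiKey: envKey || providers[name].apiKey };
  }
  return result;
}

// Example with one provider from the article; the plain object stands in for Vite's import.meta.env.
const resolved = withApiKeys(
  {
    deepseek: {
      label: "DeepSeek",
      baseURL: "https://api.deepseek.com",
      model: "deepseek-reasoner",
      apiKey: "sk-xxx",
    },
  },
  { VITE_DEEPSEEK_API_KEY: "sk-from-env" }
);
console.log(resolved.deepseek.apiKey); // "sk-from-env"
```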

export const LLM_PROVIDERS = {
  deepseek: {
    label: "DeepSeek",
    baseURL: "https://api.deepseek.com",
    model: "deepseek-reasoner",
    apiKey: "sk-xxx",
  },
  openai: {
    label: "OpenAI",
    baseURL: "https://api.openai.com/v1",
    model: "gpt-4o",
    apiKey: "sk-xxx",
  },
  qwen: {
    label: "Qwen · 通义千问",
    baseURL: "https://dashscope.aliyuncs.com/compatible-mode/v1",
    model: "qwen-max",
    apiKey: "sk-xxx",
  },
} as const;

export type ProviderName = keyof typeof LLM_PROVIDERS;

export const providerOptions = Object.entries(LLM_PROVIDERS).map(
  ([value, { label }]) => ({ label, value })
);

4.5. useChatModelDynamic.ts
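The hook in this section sends only the latest user text, so the provider sees no earlier turns. If multi-turn context is wanted, one option is to map the stored messages to the role/content pairs that the chat completions API expects. The sketch below is an assumption rather than the article's code: `toChatHistory` and `maxTurns` are hypothetical names, and empty or still-loading bubbles are skipped.

```typescript
interface StoredMessage {
  from: "user" | "model";
  content: string;
  loading?: boolean;
}

interface ChatTurn {
  role: "user" | "assistant";
  content: string;
}

// Maps stored messages to chat-completions roles, dropping empty or still-loading
// entries and keeping only the most recent maxTurns entries.
function toChatHistory(messages: StoredMessage[], maxTurns = 20): ChatTurn[] {
  return messages
    .filter((m) => !m.loading && m.content.trim() !== "")
    .slice(-maxTurns)
    .map((m): ChatTurn => ({
      role: m.from === "user" ? "user" : "assistant",
      content: m.content,
    }));
}
```

The result could be passed as the messages field of the request in place of the single-element array, at the cost of more tokens per call.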

import OpenAI from "openai";
import { watch } from "vue";
import { LLM_PROVIDERS } from "../constants/llmProviders";
import { useChatSessions } from "../stores/useChatSessions";

export function useChatModelDynamic() {
  const store = useChatSessions();
  let client = createClient();

  // Rebuild the client whenever the active session or its provider changes
  watch(
    () => [store.activeId, store.active?.provider],
    () => {
      client = createClient();
    }
  );

  function createClient() {
    const p = store.active?.provider || "deepseek";
    const cfg = LLM_PROVIDERS[p];
    return new OpenAI({
      baseURL: cfg.baseURL,
      apiKey: cfg.apiKey,
      dangerouslyAllowBrowser: true,
    });
  }

  async function send(text: string) {
    // Capture the session so the reply stays with it even if the user switches sessions mid-stream
    const session = store.active;
    if (!session) return;
    store.pushMessage({ from: "user", content: text });
    store.pushMessage({ from: "model", content: "", loading: true });
    const reply = session.messages[session.messages.length - 1];
    const cfg = LLM_PROVIDERS[session.provider];
    const stream = await client.chat.completions.create({
      model: cfg.model,
      messages: [{ role: "user", content: text }],
      stream: true,
    });
    reply.loading = false;
    for await (const chunk of stream) {
      reply.content += chunk.choices[0]?.delta?.content || "";
    }
    // pushMessage persisted the reply while it was still empty, so save again once it is complete
    store.persist();
  }

  return { send };
}

4.6. useChatSessions.ts

import { defineStore } from "pinia";
import { nanoid } from "nanoid";

export interface ChatMessage {
  id: string;
  from: "user" | "model";
  content: string;
  loading?: boolean;
  createdAt: number;
}

export interface ChatSession {
  id: string;
  title: string;
  provider: "deepseek" | "openai" | "qwen";
  messages: ChatMessage[];
  createdAt: number;
  updatedAt: number;
}

export const useChatSessions = defineStore("chatSessions", {
  state: () => ({
    sessions: [] as ChatSession[],
    activeId: "",
  }),
  getters: {
    active(state) {
      return state.sessions.find((s) => s.id === state.activeId);
    },
  },
  actions: {
    // Create a session, put it at the top of the list, and make it active
    newSession(
      title = "New session",
      provider: ChatSession["provider"] = "deepseek"
    ) {
      const id = nanoid();
      this.sessions.unshift({
        id,
        title,
        provider,
        messages: [],
        createdAt: Date.now(),
        updatedAt: Date.now(),
      });
      this.activeId = id;
      this.persist();
    },
    // Append a message to the active session; id and createdAt are filled in here
    pushMessage(partial: Omit<ChatMessage, "id" | "createdAt">) {
      const s = this.active;
      if (!s) return;
      s.messages.push({ id: nanoid(), createdAt: Date.now(), ...partial });
      s.updatedAt = Date.now();
      this.persist();
    },
    setProvider(provider: ChatSession["provider"]) {
      if (this.active) {
        this.active.provider = provider;
        this.persist();
      }
    },
    // Save all sessions to localStorage
    persist() {
      localStorage.setItem("matechat-sessions", JSON.stringify(this.sessions));
    },
    // Load sessions from localStorage and select the first one if none is active
    restore() {
      const raw = localStorage.getItem("matechat-sessions");
      if (raw) this.sessions = JSON.parse(raw);
      if (!this.activeId && this.sessions.length)
        this.activeId = this.sessions[0].id;
    },
  },
});

5. Flowchart

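5.1. Mermaid source

graph TD
  A[User interface] --> B[App.vue]
  B --> C[SessionList component]
  B --> D[Chat area]
  %% State management flow
  C --> E[useChatSessions Store]
  D --> E
  E -->|Persist locally| F[localStorage]
  F -->|Restore data| E
  %% Message handling flow
  D -->|User input| G[Send message]
  G --> H[useChatModelDynamic]
  H -->|Create client| I[OpenAI Client]
  I -->|Read config| J[LLM_PROVIDERS constants]
  I -->|API request| K[AI provider]
  K -->|Streamed response| L[Update message content]
  L --> E
  %% Session management flow
  C -->|New session| M[newSession]
  C -->|Switch session| N[Update activeId]
  M --> E
  N --> E
  E -->|Update UI| B
  %% Provider switching
  D -->|Select provider| O[setProvider]
  O --> E
  O -->|Triggers client rebuild| H
  %% Subgraph: data flow
  subgraph DataFlow[Data flow]
    E
    F
  end
  %% Subgraph: UI components
  subgraph UIComponents[UI components]
    B
    C
    D
  end
  %% Subgraph: AI interaction
  subgraph AIInteraction[AI interaction]
    H
    I
    J
    K
  end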
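The step in the chart where the streamed response updates the message content can be sketched without a network call. The snippet below fakes the provider stream with an async generator that yields chunks in the same choices[0].delta.content shape the article's hook reads; `fakeStream` and `appendStream` are hypothetical names. It writes into a captured message object, so the reply keeps going to the same bubble even if the active session changes mid-stream.

```typescript
interface StreamChunk {
  choices: { delta?: { content?: string | null } }[];
}

interface Bubble {
  content: string;
  loading: boolean;
}

// Stand-in for the provider stream: yields one chunk per text piece.
async function* fakeStream(pieces: string[]): AsyncGenerator<StreamChunk> {
  for (const piece of pieces) {
    yield { choices: [{ delta: { content: piece } }] };
  }
}

// Appends each streamed delta to the target bubble and clears its loading flag
// as soon as the first chunk arrives.
async function appendStream(
  target: Bubble,
  stream: AsyncIterable<StreamChunk>
): Promise<void> {
  for await (const chunk of stream) {
    target.loading = false;
    target.content += chunk.choices[0]?.delta?.content || "";
  }
  target.loading = false; // also covers an empty stream
}

const bubble: Bubble = { content: "", loading: true };
appendStream(bubble, fakeStream(["Hel", "lo"])).then(() => {
  console.log(bubble.content); // "Hello"
});
```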

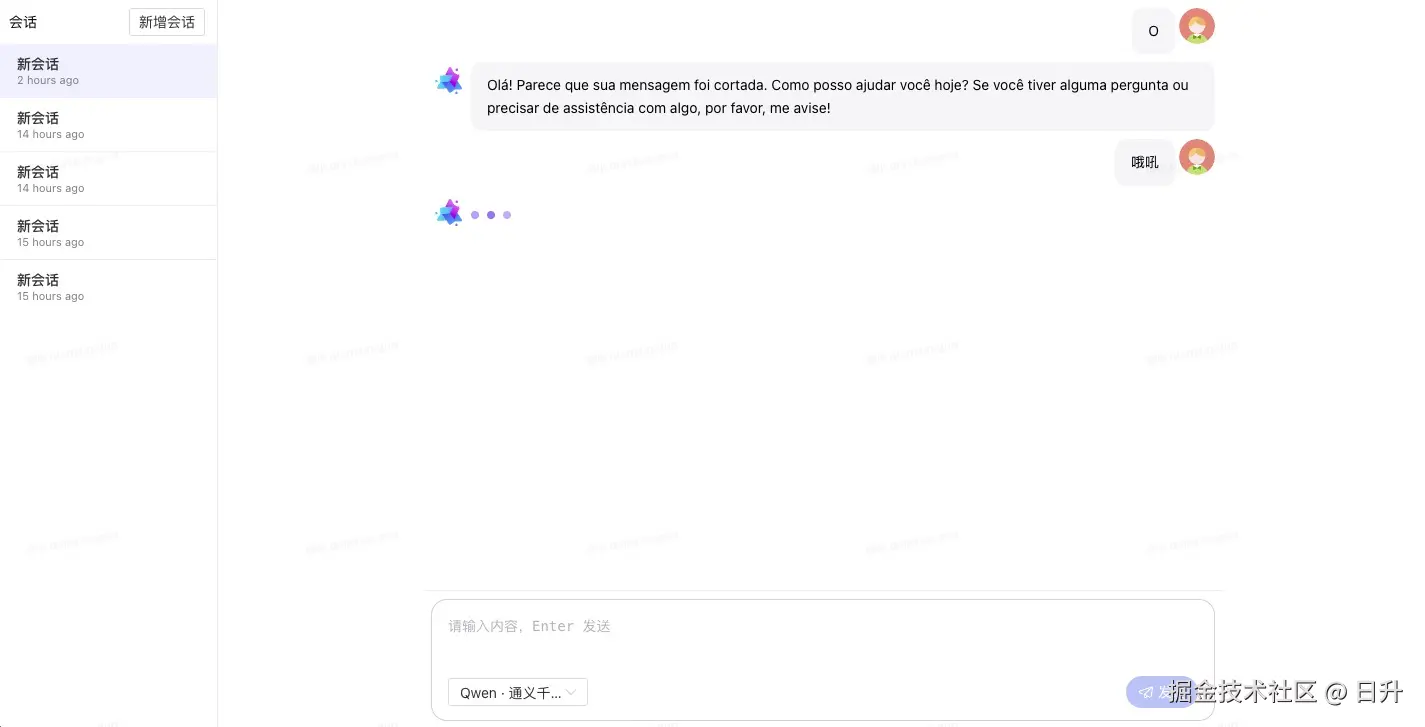

6. Page UI

II. Summary

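- Use a Pinia store with localStorage or IndexedDB; store provider at the same level as messages.
- With Pinia + IndexedDB, store messages in shards and pages, keeping only the current page in memory.
- Split the session view into two columns: SessionList on the left, McLayout on the right.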
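The paged-storage idea in the summary can be sketched as follows. Messages are split into fixed-size pages stored under per-session, per-page keys, and only the requested page is read back. A plain object stands in for IndexedDB here; `PAGE_SIZE`, `pageKey`, `savePaged`, and `loadPage` are hypothetical names, and a real implementation would go through asynchronous IndexedDB transactions instead.

```typescript
interface Msg {
  id: string;
  content: string;
}

const PAGE_SIZE = 50; // messages per page (arbitrary choice for this sketch)

// Stand-in for an IndexedDB object store keyed by "<sessionId>:<pageIndex>".
type PageStore = Record<string, Msg[]>;

function pageKey(sessionId: string, page: number): string {
  return `${sessionId}:${page}`;
}

// Splits messages into pages and writes each page under its own key; returns the page count.
function savePaged(db: PageStore, sessionId: string, messages: Msg[]): number {
  const pageCount = Math.ceil(messages.length / PAGE_SIZE);
  for (let page = 0; page < pageCount; page++) {
    db[pageKey(sessionId, page)] = messages.slice(
      page * PAGE_SIZE,
      (page + 1) * PAGE_SIZE
    );
  }
  return pageCount;
}

// Reads a single page; only this slice needs to live in memory.
function loadPage(db: PageStore, sessionId: string, page: number): Msg[] {
  return db[pageKey(sessionId, page)] ?? [];
}
```

With this layout the Pinia store would hold the session metadata plus one page of messages, and older pages would be fetched only when the user scrolls back.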
